Bayes Theorem Is Used To Compute

Juapaving

Mar 22, 2025 · 6 min read


Bayes' Theorem: Unveiling the Power of Conditional Probability

Bayes' Theorem, a cornerstone of probability theory, isn't just a mathematical formula; it's a tool for updating our beliefs in light of new evidence. It allows us to compute the posterior probability: a revised probability of an event, based on prior knowledge and new data. Understanding how Bayes' Theorem is used to compute these probabilities matters across many fields, from medicine and finance to artificial intelligence and spam filtering. This guide covers the theorem's components, how each term is calculated, its main applications, and the intuition behind it.

Understanding the Fundamentals: Prior, Likelihood, and Posterior

Before diving into the calculations, let's establish the key components of Bayes' Theorem:

- Prior Probability (P(A)): This represents our initial belief about the probability of event A before considering any new evidence. It's our best guess based on existing knowledge or assumptions.

- Likelihood (P(B|A)): This is the probability of observing evidence B, given that A is true. It quantifies how likely the observed evidence is if our hypothesis (event A) holds.

- Posterior Probability (P(A|B)): This is the ultimate goal: the revised probability of event A after considering the new evidence B. It represents our updated belief about A based on the observed data.

- Evidence Probability (P(B)): This is the probability of observing the evidence B, regardless of whether event A occurred or not. It acts as a normalizing factor that keeps the posterior a valid probability (between 0 and 1), and it must be greater than 0 for the posterior to be defined. It can often be calculated using the law of total probability.

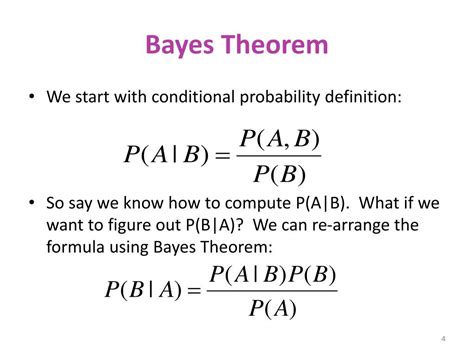

The Bayes' Theorem Formula: A Mathematical Representation

The relationship between these components is elegantly captured by Bayes' Theorem:

P(A|B) = [P(B|A) * P(A)] / P(B)

This formula allows us to calculate the posterior probability P(A|B) using the prior probability P(A), the likelihood P(B|A), and the evidence probability P(B).
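The formula translates directly into a few lines of code. The following is a minimal sketch; the function name `posterior` and the input numbers are illustrative assumptions, not values from this article.

```python
def posterior(prior: float, likelihood: float, evidence: float) -> float:
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    if evidence <= 0:
        # The posterior is undefined when the evidence has zero probability.
        raise ValueError("P(B) must be positive for P(A|B) to be defined")
    return likelihood * prior / evidence


# Illustrative inputs: P(A) = 0.3, P(B|A) = 0.8, P(B) = 0.5
print(posterior(prior=0.3, likelihood=0.8, evidence=0.5))
```

With these inputs the result is close to 0.48: observing B raises the probability of A from 0.3 because B is more likely when A is true (0.8) than it is overall (0.5).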

Calculating P(B): The Law of Total Probability

Often, directly calculating P(B) can be challenging. This is where the Law of Total Probability comes in handy. It lets us split P(B) across two mutually exclusive cases: either A occurred, or it did not.

Because A and its complement (¬A) are mutually exclusive and exhaustive, we have:

P(B) = P(B|A) * P(A) + P(B|¬A) * P(¬A)

This formula expands P(B) into the probability of B given A, weighted by the probability of A, plus the probability of B given not A, weighted by the probability of not A. This allows for a more manageable calculation of P(B).
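As a rough sketch, the two-case expansion can be written as a small helper. The function name `total_probability` and the sample numbers are assumptions made for illustration.

```python
def total_probability(prior: float, lik_given_a: float, lik_given_not_a: float) -> float:
    """Return P(B) = P(B|A) * P(A) + P(B|not A) * P(not A)."""
    if not 0.0 <= prior <= 1.0:
        raise ValueError("P(A) must lie between 0 and 1")
    # P(not A) is 1 - P(A) because A and its complement are exhaustive.
    return lik_given_a * prior + lik_given_not_a * (1.0 - prior)


# Illustrative inputs: P(A) = 0.3, P(B|A) = 0.8, P(B|not A) = 0.2
print(total_probability(prior=0.3, lik_given_a=0.8, lik_given_not_a=0.2))
```

Here the two weighted terms are 0.24 and 0.14, so P(B) comes out near 0.38.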

Practical Applications: Unveiling the Power of Bayes' Theorem

The versatility of Bayes' Theorem is evident in its wide range of applications:

1. Medical Diagnosis:

Imagine a test for a rare disease. Let's say:

- P(Disease) = 0.01 (Prior: 1% prevalence)

- P(Positive Test|Disease) = 0.95 (Likelihood: the test's sensitivity, meaning it is positive for 95% of people who have the disease)

- P(Positive Test|No Disease) = 0.05 (Likelihood: 5% false positive rate)

If someone tests positive, what's the probability they actually have the disease? Using Bayes' Theorem, we can calculate the posterior probability.

First, we find P(Positive Test) using the Law of Total Probability:

P(Positive Test) = P(Positive Test|Disease) * P(Disease) + P(Positive Test|No Disease) * P(No Disease)

P(Positive Test) = (0.95 * 0.01) + (0.05 * 0.99) = 0.0095 + 0.0495 = 0.059

Now we can use Bayes' Theorem:

P(Disease|Positive Test) = (0.95 * 0.01) / 0.059 ≈ 0.16

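Even with a seemingly accurate test, the probability of actually having the disease given a positive test is only around 16%. The reason is that the disease is rare: out of 10,000 people, about 95 of the 100 who have the disease test positive, but so do 495 of the 9,900 who do not, so only 95 of the 590 positive results are true cases. This highlights the importance of considering prior probabilities, especially when dealing with rare events.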
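The calculation above can be reproduced in a short script. This is a sketch using the numbers from the example; the variable names are illustrative.

```python
prevalence = 0.01           # P(Disease)
sensitivity = 0.95          # P(Positive Test | Disease)
false_positive_rate = 0.05  # P(Positive Test | No Disease)

# Law of Total Probability: overall chance of a positive test.
p_positive = sensitivity * prevalence + false_positive_rate * (1 - prevalence)

# Bayes' Theorem: chance of disease given a positive test.
p_disease_given_positive = sensitivity * prevalence / p_positive

print(f"P(Positive Test) = {p_positive:.3f}")                         # 0.059
print(f"P(Disease|Positive Test) = {p_disease_given_positive:.3f}")   # 0.161
```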

2. Spam Filtering:

Email spam filters utilize Bayes' Theorem to classify emails as spam or not spam.

- Prior: A prior probability is assigned to an email being spam based on historical data (e.g., 10% of emails are typically spam).

- Likelihood: The likelihood is the probability of seeing specific words or phrases (e.g., "free money," "prize") given that an email is spam, compared with the probability of seeing them given that it is not. Both are estimated from the frequency of these words in known spam and non-spam emails.

- Posterior: Bayes' Theorem combines the prior and likelihood to compute the posterior probability that an email is spam. If this probability exceeds a certain threshold, the email is classified as spam.
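The three steps above can be sketched as a toy naive Bayes filter. Everything here is an assumption made for illustration: the word probabilities and the 0.5 threshold are hypothetical, words are treated as conditionally independent given the class, and real filters estimate these values from large labelled corpora. Only the 10% prior comes from the example above.

```python
import math

# Hypothetical P(word | class) values; in practice these are estimated
# from word counts in labelled spam and non-spam emails.
P_WORD_GIVEN_SPAM = {"free": 0.30, "money": 0.20, "prize": 0.10, "meeting": 0.01}
P_WORD_GIVEN_HAM = {"free": 0.03, "money": 0.02, "prize": 0.005, "meeting": 0.10}
PRIOR_SPAM = 0.10  # prior from the example above


def spam_posterior(words, prior_spam=PRIOR_SPAM):
    """Return P(spam | words) under the naive independence assumption."""
    # Work in log space so long emails do not underflow to zero.
    log_spam = math.log(prior_spam)
    log_ham = math.log(1.0 - prior_spam)
    for word in words:
        if word in P_WORD_GIVEN_SPAM:  # skip words outside the toy vocabulary
            log_spam += math.log(P_WORD_GIVEN_SPAM[word])
            log_ham += math.log(P_WORD_GIVEN_HAM[word])
    # Normalise the two joint scores; their sum plays the role of P(B).
    shift = max(log_spam, log_ham)
    spam = math.exp(log_spam - shift)
    ham = math.exp(log_ham - shift)
    return spam / (spam + ham)


THRESHOLD = 0.5  # hypothetical decision threshold
for email in (["free", "money"], ["meeting"]):
    p = spam_posterior(email)
    print(email, round(p, 3), "spam" if p > THRESHOLD else "not spam")
```

With these made-up numbers, an email containing "free" and "money" scores about 0.92 and is flagged, while one containing only "meeting" scores about 0.01.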

3. Finance and Risk Assessment:

Bayes' Theorem helps assess financial risk by incorporating new information into existing risk models. For example, it can be used to update the probability of a loan defaulting based on the borrower's credit history and recent economic indicators.
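One way to picture this updating is to apply Bayes' Theorem once per new piece of information, with each posterior becoming the prior for the next step. The sketch below uses entirely hypothetical numbers and assumes the observations are conditionally independent given the loan outcome; it is not a real credit-risk model.

```python
def update(prior: float, p_evidence_if_default: float, p_evidence_if_repay: float) -> float:
    """Return P(default | evidence) after one Bayesian update."""
    numerator = p_evidence_if_default * prior
    evidence = numerator + p_evidence_if_repay * (1.0 - prior)
    return numerator / evidence


# All numbers below are hypothetical.
p_default = 0.05  # prior default rate before any borrower-specific information

# (description, P(evidence | default), P(evidence | repay))
observations = [
    ("missed payment on record", 0.60, 0.10),
    ("rising regional unemployment", 0.50, 0.30),
]

for label, p_if_default, p_if_repay in observations:
    # The posterior from this step is the prior for the next one.
    p_default = update(p_default, p_if_default, p_if_repay)
    print(f"after '{label}': P(default) = {p_default:.3f}")
```

Under these assumptions the estimated default probability rises from 0.05 to 0.24 after the first observation and to roughly 0.34 after the second.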

4. Machine Learning and Artificial Intelligence:

Bayesian methods are fundamental to many machine learning algorithms. Naive Bayes classifiers, for instance, use Bayes' Theorem to classify data points based on their features, under the simplifying assumption that the features are conditionally independent given the class. These classifiers are widely used in text categorization and have also been applied to image recognition and medical diagnosis.

5. Weather Forecasting:

Meteorologists can use Bayesian networks to integrate various weather data sources (satellite imagery, radar, ground observations) and update probability forecasts of future weather conditions. The prior might be a long-term weather pattern, and new observations are used to update the posterior probability of specific weather events.

Beyond the Basics: Addressing Challenges and Refinements

While Bayes' Theorem is a powerful tool, several considerations are crucial for accurate and effective application:

- Accurate Prior Probabilities: The accuracy of the posterior probability is heavily reliant on the accuracy of the prior. If the prior is incorrect, the results will be misleading. Careful consideration must be given to obtaining reliable prior probabilities, possibly through historical data, expert opinions, or other relevant information.

- Data Quality: The quality of the data used to compute likelihoods is paramount. Inaccurate or biased data will lead to flawed results. Data cleaning, validation, and rigorous quality control are essential.

- Computational Complexity: For complex problems with many variables, calculating P(B) using the Law of Total Probability can become computationally expensive. Approximation techniques, such as Monte Carlo methods, might be necessary.

- Subjectivity of Priors: In some cases, choosing appropriate prior probabilities might involve subjective judgments. Sensitivity analysis can help assess how much the posterior is affected by changes in the prior.
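A simple form of sensitivity analysis is to recompute the posterior over a range of plausible priors. The sketch below reuses the test characteristics from the medical diagnosis example (0.95 and 0.05); the list of priors and the function name are illustrative assumptions.

```python
def posterior_given_positive(prior, sensitivity=0.95, false_positive_rate=0.05):
    """Return P(Disease | Positive Test) for a given prior prevalence."""
    numerator = sensitivity * prior
    return numerator / (numerator + false_positive_rate * (1.0 - prior))


# Sweep the prior and watch how strongly the posterior responds.
for prior in (0.001, 0.01, 0.05, 0.10, 0.50):
    print(f"prior = {prior:<5}  posterior = {posterior_given_positive(prior):.3f}")
```

With the same test, the posterior ranges from about 0.02 at a prior of 0.001 to 0.95 at a prior of 0.5, which shows how much the conclusion depends on the prior in this example.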

Conclusion: A Versatile Tool for Updating Beliefs

Bayes' Theorem is not merely a mathematical formula; it's a framework for rationally updating our beliefs based on new evidence. Its applicability across diverse fields underscores its importance in probabilistic reasoning. By understanding its components, calculations, and limitations, we can use Bayes' Theorem to make better decisions and more accurate predictions under uncertainty, incorporating new information as it arrives rather than relying on fixed probabilistic statements.
